It’s been a while since I wrote about SSIS – most of what we do these days is happening in the cloud.

But, sometimes, we still need to resort to the old good time-proven technologies… such as SSIS.

What’s the de-facto standard toolset of any Dynamics/PowerPlatform consultant who need to use SSIS? Well, that’s, of course, SSIS itself and Kingsway connector in particular.

If you ever had to do the following:

- Read rows from the file

- Adjust Dynamics CRM Source query for each line to query data from one environment

- So you could push certain changes to another environment

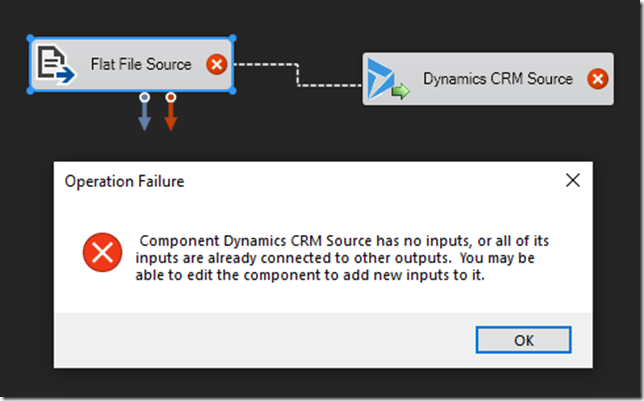

You probably know that Dynamics CRM Source does not accept inputs, so you can’t just use a File Source and connect File Source’s output to the Dynamics CRM Source’s input:

Actually, this does not have anything to do with Kingsway, since other data sources (OleDB, for instance), would have exactly the same behavior.

I don’t know if I’ll ever get a chance to write about it again given that I only need to dig into SSIS every so often these days, so, here is a workaround just for the record.

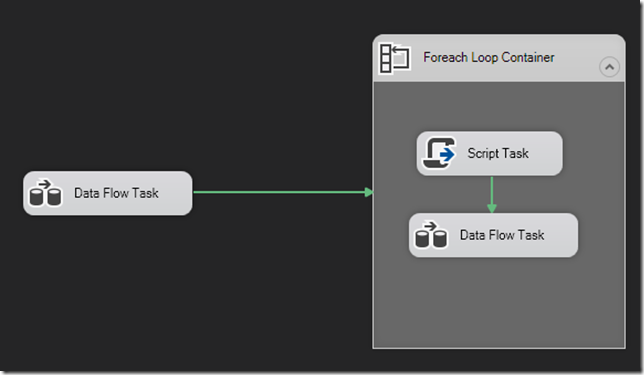

Instead of doing everything in the same data flow task, you can do this:

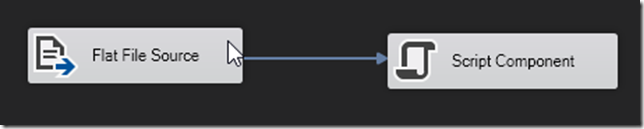

In the first task there, read data from the file and push all file lines to an object variable using a script component:

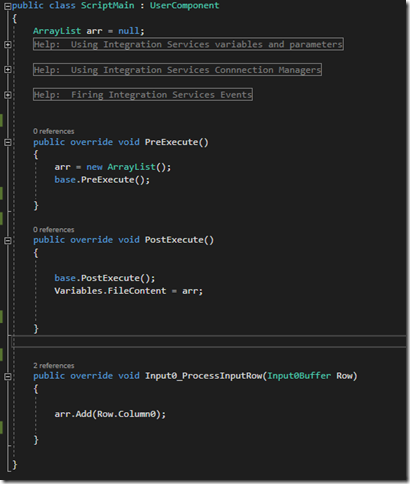

Here is how the script looks like:

So, basically, just push everything to an array list, and pass it back to the package through a variable.

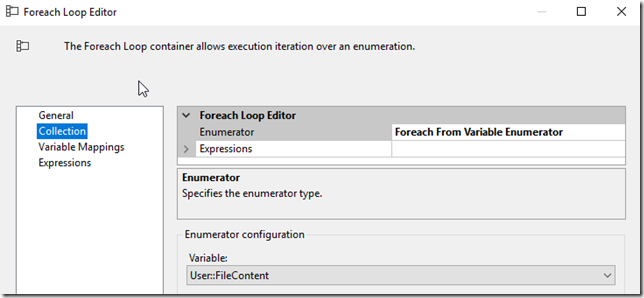

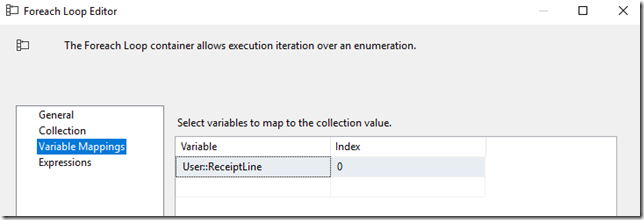

From there, set up a For Each Loop Container to work off your variable:

For every iteration, you’ll need to push each individual line to another variable:

From there, you can now work with each row separately in the loop. This should also be applicable to other data sources – it could even be Dynamics CRM Source originally, though I’m not sure how to pass complex objects through the enumeration in this case.

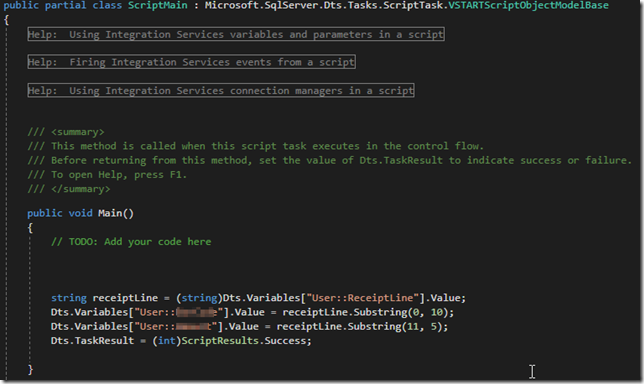

Anyways, since we now have individual file row in the variable, we can use a Script Task to parse that variable line and populate additional variables, and, from there, we can use those other variables in the other tasks within each iteration to query Dataverse and/or to do whatever else we need there.

And the end result is that I can now use those variables to tweak FetchXml as required for all Dynamics CRM Sources in those other tasks within the Foreach Loop Container.

That seems to be a lot of work for a simple task… and I can’t help but notice that I could probably do this in Power Automate more efficiently. But, well, we don’t always have the choice of technology, so, if you have to do this with SSIS, this might help.

It would be interesting if you could please share how Power Automate does it more efficiently. This is a very common ETL design issue, I don’t know if Power Automate would be much an exception except Power Automate isn’t necessarily an ETL tool to begin with anyway.