In one of the earlier posts this month I was seemingly unhappy about the missing “incremental refresh” option: https://www.itaintboring.com/dynamics-crm/power-platform-dataflows-have-i-missed-incremental-refresh-option-somehow/

But, having thought about it a bit more, I don’t think it’s such a big issue. Not when working with the SQL data sources, at least.

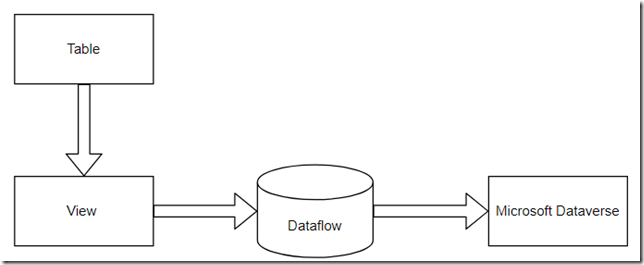

All we really need is a view that will pre-filter source data:

So, I could just create a view like this:

CREATE VIEW ETLTest_Modified

AS

SELECT * FROM ETLTest

WHERE DATEADD(minute, 360, ModifiedOn) > GETUTCDATE()

And I could use it as a source table for the dataflow to limit the amount of data this dataflow has to transfer to Dataverse on every run.

This would do it, since, once the dataflow starts, it would grab 6 hours(in the example above) of modified data, and it’ll push it to the Dataverse table.

I might still see a bit of excessive utilization on the API side, though, since, if dataflow refresh time is not aligned properly with the view, the dataflow might still be loading data that’s already been loaded.

For example:

- We might set up the view to only show data that was modified in the last 2 hours

- We might want that data to go to Dataverse every 5 minutes

- So, on the first run, the dataflow will take 2 hours of data and push it to Dataverse

- On the second run (which would be 5 minutes later), the same dataflow would take 2 hours of data again… but that would include 1 hr 55 minutes of data that’s already been pushed to the Dataverse on the previous run

Depending on how often new data will be coming into the SQL table(and, also, depending on how often we want to push it to the Dataverse), though, this might or might not be a problem. For example, if that SQL table is only updated once a day at a specific time, we could set up the Dataflow to refresh 3 hours after the scheduled SQL table update, and, except for some rare occasions where SQL table is not updated on time, this would be a good enough approximation of the incremental refresh.

Also, there is one feature which is currently in preview and which might make this whole problem irrelevant, but, it seems, it’s not working with Power Platform Dataflows yet:

Somehow, when using “refresh dataflow” action, I’m not able to pick the environment where my Dataflow is – it only works with the default environment, it seems.

If this worked, I could just delegate “scheduling” part to the Power Automate, in which case I could do it like this:

- Update my View above to only include data where “modifiedon” is between start and end dates

- Those start and end dates might come from another table

- In the scheduled Power Automate flow, I could set those “start” and “end” dates, then use “dataflow refresh” action to refresh the dataflow

- For the next scheduled run, I’d user previous “end” date as a “start” date, and “current time” as an “end date”

This would have taken care of the incremental refresh.

So… just waiting for that action to start working properly in my tenant![]()