The question of how to set up a branching strategy for Dataverse development comes up almost inevitably. It surfaces at some point on every project, and it may come up several times on the same project as new people join.

It probably makes sense to explain why we have this problem in the first place (as for whether there is a good solution, I think that depends on the project requirements).

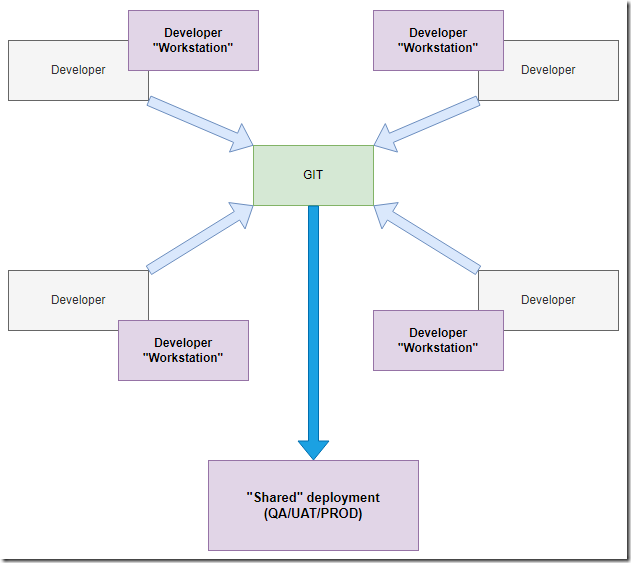

The first diagram below shows your classic project, where every developer has their own “workstation” for development, with a local execution environment on that workstation.

Once the changes are ready, they are pushed to git and, from there, deployed to the QA/UAT/Prod environments.

This setup also makes branching possible, since every developer can work on their own branch in git. That works because there is a separate execution environment per developer: each developer maintains their own environment, so switching branches locally does not affect anyone else.

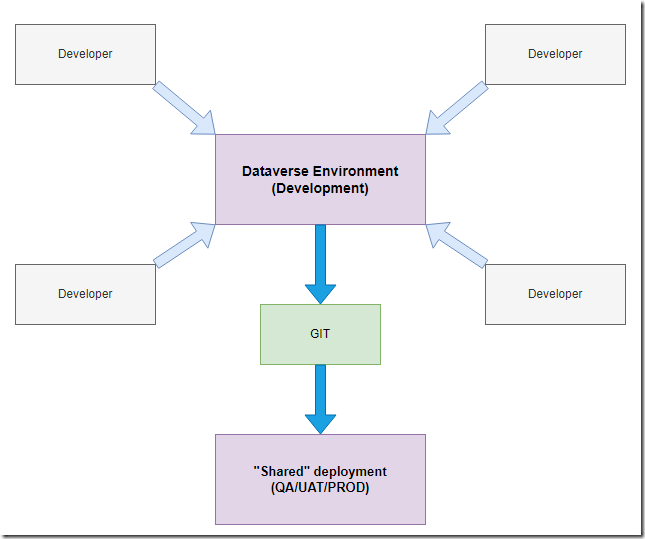

However, once we switch to Dataverse / Power Platform, everything changes. Every developer still has a workstation, but they may now be sharing the execution environment:

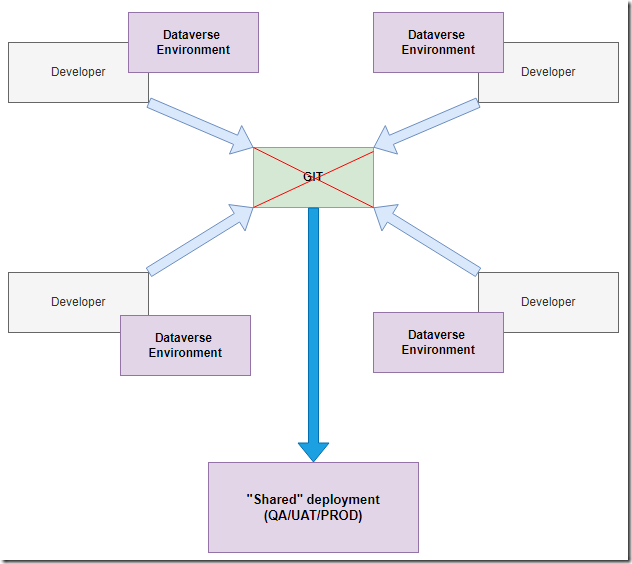

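That makes branching in git nearly impossible, since every developer's changes land in the same environment regardless of which branch they belong to. So, of course, you might try changing the setup a little more. Why not have a Power Platform/Dataverse environment per developer?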

This may work better, but you’ll still have to figure out source control, since you won’t be making “code” changes in those individual development environments. You’ll be making configuration and customization changes, and all of that is supposed to be transported through Dataverse solutions.

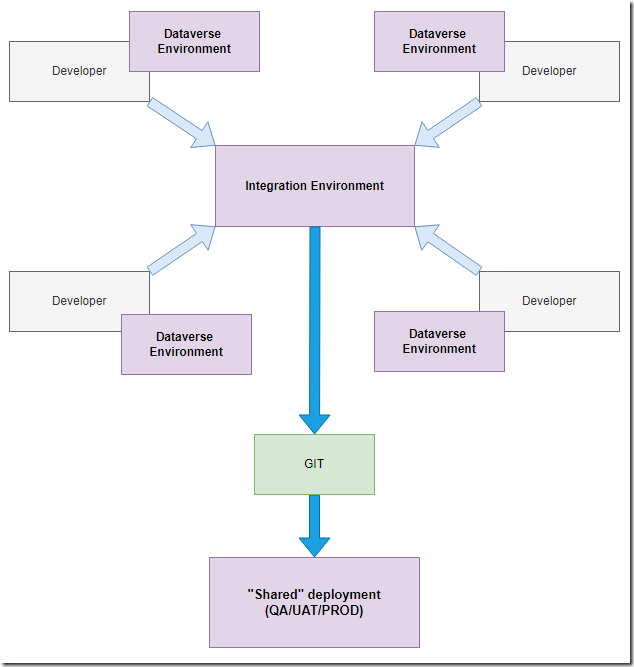

Solutions are still far from perfect when it comes to branching and merging in git, and, more often than not, the last mile between the individual development environments and git has to be covered by manually re-implementing changes in some kind of “integration environment”:

That’s when you may finally have a setup somewhat similar to classic development, but it involves a few more Power Platform environments, as well as that manual or semi-manual step of pushing ready-to-deploy changes to the “integration” environment.

Dynamics/Power Platform has always had the shared developer environment problem.

The solution I have often seen is a shared environment: when you are ready, you move only your change into a Dev master. Once the change is made there, you raise a pull request that contains only your changes.

If you don’t have a Dev master, it’s a pain to identify the individual changes for a particular story or developer. Instead, you end up taking a clump of changes from all the developers, because you can’t always keep the changes separate.

Although we worry about such things, often we don’t need to be that precise: source control is there to track changes and allow us to roll back.

Good article

Maybe a useful addition is our workflow with Azure DevOps, where git sits between the separate dev Dataverse instances and the integration instance.

DEV

Dev 1 => Dataverse Dev 1 => Azure DevOps pipeline (export unmanaged solution, unpack and commit) => Git branch Dev 1

Dev 2 => Dataverse Dev 2 => … => Git branch Dev 2
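The DEV stage above can be sketched in Python as the list of commands a pipeline would run for one developer. This is a minimal sketch, not the commenter's actual pipeline: it assumes the Power Platform CLI (`pac`) and git are on the PATH and that `pac auth` already points at that developer's Dataverse environment. The helper names, the `ContosoCore` solution name, the `build` folder and the `dev-1` branch are hypothetical; check the flags against `pac solution export --help` and `pac solution unpack --help` for your CLI version.

```python
import subprocess
from pathlib import Path


def dev_stage_commands(solution: str, work_dir: Path, branch: str) -> list[list[str]]:
    """Hypothetical helper: export, unpack and commit one developer's solution."""
    zip_path = work_dir / f"{solution}.zip"
    src_dir = work_dir / "src" / solution
    return [
        # Switch to the developer's own branch before writing the unpacked files.
        ["git", "checkout", branch],
        # Export the solution as unmanaged (the default when --managed is omitted)
        # from the environment `pac auth` points at (assumed: Dataverse Dev N).
        ["pac", "solution", "export", "--name", solution, "--path", str(zip_path)],
        # Unpack the zip into one file per component so git can diff and merge them.
        ["pac", "solution", "unpack", "--zipfile", str(zip_path),
         "--folder", str(src_dir), "--packagetype", "Unmanaged"],
        # Stage and commit only the unpacked source, not the zip itself.
        ["git", "add", str(src_dir)],
        ["git", "commit", "-m", f"Export {solution} from {branch}"],
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops at the first failing command.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run(dev_stage_commands("ContosoCore", Path("build"), "dev-1"))
```

Committing only the unpacked folder keeps the branch as diffable component files rather than a binary archive. Note that `git commit` exits with an error when nothing changed, so a real pipeline would need to handle that case.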

MERGE

Git branch Dev 1 + Git branch Dev 2 => merge into the Merge branch => Azure DevOps pipeline (pack solution, import into integration Dataverse)
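The MERGE stage can be sketched the same way: merge the developer branches into the merge branch, pack the merged source back into a solution zip, and import it into the integration environment. As before, this is an illustrative sketch assuming `pac` and git are available and that `pac auth` targets the integration environment at import time; the helper, branch names and folder layout are hypothetical.

```python
def merge_stage_commands(solution: str, merge_branch: str,
                         dev_branches: list[str]) -> list[list[str]]:
    """Hypothetical helper: merge dev branches, pack, import into integration."""
    src_dir = f"src/{solution}"
    zip_path = f"build/{solution}.zip"
    commands = [["git", "checkout", merge_branch]]
    for branch in dev_branches:
        # --no-ff keeps one merge commit per developer branch for traceability.
        commands.append(["git", "merge", "--no-ff", branch])
    commands += [
        # Rebuild an unmanaged solution zip from the merged component files.
        ["pac", "solution", "pack", "--zipfile", zip_path,
         "--folder", src_dir, "--packagetype", "Unmanaged"],
        # Import into whichever environment `pac auth` currently points at
        # (assumed here to be the integration Dataverse environment).
        ["pac", "solution", "import", "--path", zip_path],
    ]
    return commands


if __name__ == "__main__":
    for cmd in merge_stage_commands("ContosoCore", "merge", ["dev-1", "dev-2"]):
        # Printed only; pass each list to subprocess.run(cmd, check=True) to execute.
        print(" ".join(cmd))
```

If `git merge` reports conflicts in the unpacked component files, they have to be resolved before the pack step; this is where the merging limitations described in the article tend to show up.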

DEPLOY

Dataverse integration => Azure DevOps pipeline (export as managed solution, store as artifact)

Azure DevOps release pipeline => import artifact into UAT / Production
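The DEPLOY stage splits into a build step (export managed, publish the zip as an artifact) and a release step (import that same zip into each target). The sketch below assumes `pac` is available, that `--managed true` is accepted by your CLI version of `pac solution export`, and that one `pac auth` profile per target environment was created beforehand with `pac auth create`; the helper names and the `uat`/`prod` profile names are hypothetical.

```python
def build_stage_commands(solution: str, artifact_dir: str) -> list[list[str]]:
    """Hypothetical helper: export the managed solution from integration."""
    zip_path = f"{artifact_dir}/{solution}_managed.zip"
    return [
        # Export as managed from the environment `pac auth` points at (assumed:
        # integration). The pipeline would then publish this zip as an artifact.
        ["pac", "solution", "export", "--name", solution,
         "--path", zip_path, "--managed", "true"],
    ]


def release_stage_commands(artifact_zip: str, auth_profiles: list[str]) -> list[list[str]]:
    """Hypothetical helper: import the same managed artifact into each target."""
    commands: list[list[str]] = []
    for profile in auth_profiles:
        # Activate the auth profile for the next target environment.
        commands.append(["pac", "auth", "select", "--name", profile])
        # Import the unchanged managed artifact into that environment.
        commands.append(["pac", "solution", "import", "--path", artifact_zip])
    return commands


if __name__ == "__main__":
    build = build_stage_commands("ContosoCore", "artifacts")
    release = release_stage_commands("artifacts/ContosoCore_managed.zip", ["uat", "prod"])
    for cmd in build + release:
        print(" ".join(cmd))
```

Importing the identical managed zip into UAT and then Production means Production receives exactly what was tested in UAT, rather than a fresh export.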