I wrote my previous post knowing full well it was going to sound controversial to any pro-dev, that is, if a pro-dev were to read it. As it turned out, a fellow MVP, Daryl LaBar, did, and he raised the bar by responding with a well-articulated article of his own:

https://dotnetdust.blogspot.com/2021/09/Long-Functions-Are-Always-A-Code-Smell.html

Before I continue, I have to mention that I often question seemingly obvious things. Sometimes that leads to useful findings; other times, those questions are easily dismissed, and life just goes on. No harm done.

In this case, I am definitely not implying that “long code” is inherently better for plugins, but, having read Daryl’s post, I am still not convinced it’s inherently worse either, so I’ll try to play devil’s advocate and explain why.

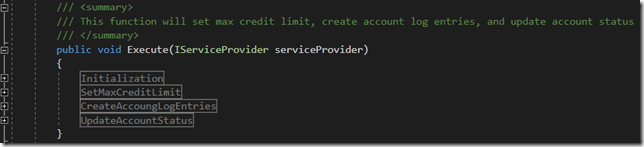

First of all, the book analogy makes sense, but only to an extent. There are other practices that can improve readability and understanding: I could add a documentation-style comment just before the Execute method, and then use regions inside the plugin to isolate pieces of code the same way functions would.

Would there be any readability benefits? Not necessarily (except that, yes, I don’t need to jump back and forth and can follow the plugin logic from start to end). Would there be readability drawbacks? The answer seems to be the same: not necessarily.
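To make the comparison concrete, here is a minimal sketch in Python (rather than the C# a real plugin would typically use) of the same hypothetical “contact on create” logic written both ways: one long entry point whose sections are marked by comment banners standing in for C# `#region` blocks, and one that delegates to small functions. The field names and rules are illustrative assumptions, not taken from any real plugin.

```python
# Hypothetical "contact on create" logic, written two ways.
# Comment banners stand in for C# #region blocks.

def execute_long(contact: dict) -> dict:
    """One long entry point that reads top to bottom."""
    # ---- region: validate input ----
    if not contact.get("lastname"):
        raise ValueError("lastname is required")

    # ---- region: build full name ----
    first = contact.get("firstname", "").strip()
    contact["fullname"] = f"{first} {contact['lastname'].strip()}".strip()

    # ---- region: normalize phone (keep digits only) ----
    phone = contact.get("telephone1", "")
    contact["telephone1"] = "".join(ch for ch in phone if ch.isdigit())
    return contact


def validate(contact: dict) -> None:
    if not contact.get("lastname"):
        raise ValueError("lastname is required")


def build_full_name(contact: dict) -> None:
    first = contact.get("firstname", "").strip()
    contact["fullname"] = f"{first} {contact['lastname'].strip()}".strip()


def normalize_phone(contact: dict) -> None:
    phone = contact.get("telephone1", "")
    contact["telephone1"] = "".join(ch for ch in phone if ch.isdigit())


def execute_split(contact: dict) -> dict:
    """The same steps, each moved into its own small function."""
    validate(contact)
    build_full_name(contact)
    normalize_phone(contact)
    return contact
```

Both versions produce the same result; the only difference is whether you read each step in place or jump to it.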

Are there pieces of code where a function would work better? Of course: whenever that piece is reusable. But the assumption so far is that not much plugin code is, really, reusable.

Now, Daryl writes that having smaller functions is beneficial for error logging since function names show up in the log:

“if the 300 lines where split into 10 functions of 30 lines, then I’d know the function that would be causing the error and would only have a tenth of the code to analyze for null ref. That’s huge!”
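A minimal Python sketch of the mechanism Daryl describes, with hypothetical function names and `None` playing the role of a null reference: the stack trace names the innermost function, so the split version points at `set_credit_limit`, while the long version only points at the entry point.

```python
import traceback


# Hypothetical plugin code, split into a small function...
def set_credit_limit(account):
    account["credit_limit"] = 10_000  # fails if account is None


def execute_split(account):
    set_credit_limit(account)


# ...and the same statement inlined into one long entry point.
def execute_long(account):
    account["credit_limit"] = 10_000  # fails if account is None


def failing_function(entry_point, *args) -> str:
    """Run entry_point and return the name of the function where the error was raised."""
    try:
        entry_point(*args)
    except Exception as exc:
        # extract_tb lists frames outermost first; the last one is where it was raised
        return traceback.extract_tb(exc.__traceback__)[-1].name
    return ""


print(failing_function(execute_split, None))  # set_credit_limit
print(failing_function(execute_long, None))   # execute_long
```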

There is nothing wrong with Daryl’s argument, but here is what usually happens:

- A bug shows up in production

- We need to make sure we have a repro to start with

- Then we start debugging to pinpoint the line of code that’s causing the error

Let’s assume we have those 30 lines of code. To identify the one specific line that fails (let’s say we can’t easily guess it), we still need to isolate that line before we can fix it. So we either have to add diagnostics to the code, or guess and build different versions of the plugin to see which one stops failing.

If we have to add diagnostics/tracing to every line where a “null reference” error might potentially happen, an argument could be made that all such lines in the plugin should be instrumented as a matter of best practice (to make sure we have all the required info the next time an error happens in production).

That, in turn, negates the difference between having a function and not having one.

If we go with guessing instead (let’s say we have 5 educated guesses about which line, exactly, is failing), we’d need to build 5 versions of the plugin in the worst case. That is not far from the 7-8 versions we’d need if we simply kept splitting the code in half and checking whether the test still reaches that point (since 2^8 = 256, eight halvings are enough to narrow 256 lines down to one). However, after those 7-8 runs we’ll know exactly where the error is happening, whereas with 5 educated guesses we will often end up right where we started, since we might have guessed wrong in the first place.
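The arithmetic behind those 7-8 runs is a binary search: each build halves the range of suspect lines, so 256 lines take log2(256) = 8 builds. A minimal sketch, assuming straight-line code and a hypothetical `reaches(k)` callback that stands for one build-and-test cycle with a checkpoint placed just before line `k`:

```python
def find_failing_line(num_lines: int, reaches) -> tuple:
    """Return (failing_line, builds_used) using binary search.

    reaches(k) is a stand-in for one build + test run with a checkpoint just
    before line k: True if execution got there (so the error is at line k or
    later), False if it failed earlier.
    """
    lo, hi = 0, num_lines - 1          # the failing line is somewhere in [lo, hi]
    builds = 0
    while lo < hi:
        mid = (lo + hi + 1) // 2       # upper middle, so the range always shrinks
        builds += 1
        if reaches(mid):
            lo = mid                   # error is at mid or later
        else:
            hi = mid - 1               # error is before mid
    return lo, builds


# Simulate a 256-line plugin that fails on line 200.
print(find_failing_line(256, lambda k: k <= 200))  # (200, 8)
```

With 300 lines, the same search needs 9 builds, since 2^8 = 256 is no longer enough.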

There is a potential problem with that, of course: all those “if” statements, which can quickly confuse this kind of “divide and conquer” strategy. This is where I’d agree with Daryl: we should not have complex “if” structures in long code, or, at least, we should try to make those if-s sequential, as in:

```
if (…) { }

if (…) { }
```

Instead of:

```
if (…) {
    if (…) {
    }
}
```

But that’s not the same as insisting on shorter functions.
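A minimal Python sketch of that flattening, with placeholder conditions `a` and `b` and placeholder steps: the nested `if` becomes two sequential ones by repeating the outer condition, so execution always passes the point between them, which is where a “divide and conquer” checkpoint would go. This assumes the first step does not change `a` or `b`.

```python
from itertools import product


def nested(a: bool, b: bool) -> list:
    steps = []
    if a:
        steps.append("step A")
        if b:
            steps.append("step A+B")
    return steps


def sequential(a: bool, b: bool) -> list:
    steps = []
    if a:
        steps.append("step A")
    # Control always reaches this line, so a checkpoint placed here is meaningful.
    if a and b:
        steps.append("step A+B")
    return steps


# Same behaviour for every combination of the two conditions.
for a, b in product([False, True], repeat=2):
    assert nested(a, b) == sequential(a, b)
```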

Also, I often add almost line-by-line tracing to the plugin. Once that’s done, it does not matter how long the function is or how complex those if-s are, since the error message will contain enough detail to land on the block of code where the error occurred.

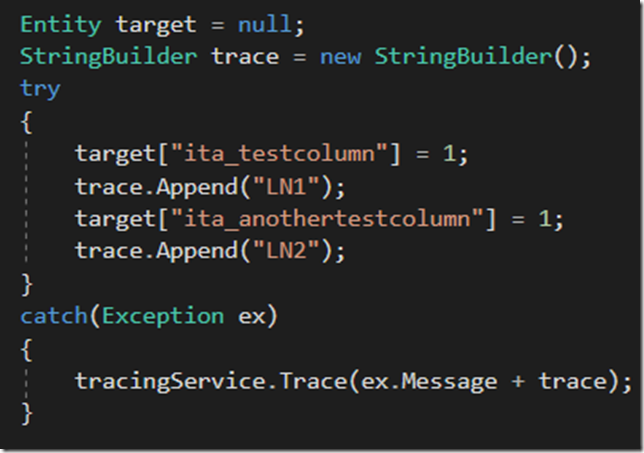

It’s taken to the extreme in the example below, but, if you absolutely need to know where your plugin fails without having to guess, that’s more or less how you’d do it:

That said, do I always do it? Not at all. I’ll often start doing it once there is an issue, and then I keep that tracing in the plugin until the next issue, when I add more, and so on.
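A minimal sketch of that kind of tracing, in Python for brevity: in a real plugin the messages would go to the platform’s tracing service (`ITracingService.Trace` in C#), while here a hypothetical `Tracer` class just keeps them in a list. The plugin body, field names, and error are illustrative assumptions; the point is that the last message recorded before the exception tells you which block failed.

```python
class Tracer:
    """Hypothetical stand-in for a tracing service: keeps messages in order."""

    def __init__(self):
        self.messages = []

    def trace(self, message: str) -> None:
        self.messages.append(message)


def execute(account: dict, tracer: Tracer) -> None:
    """Hypothetical plugin body with a trace call before every step."""
    tracer.trace("reading revenue")
    revenue = account["revenue"]
    tracer.trace("reading parent account")
    parent = account.get("parent")
    tracer.trace("reading parent credit limit")
    limit = parent["credit_limit"]  # TypeError when there is no parent
    tracer.trace("setting credit limit")
    account["credit_limit"] = min(limit, revenue // 10)


def run_with_trace(account: dict) -> tuple:
    """Run the plugin; on failure, report the error and the last trace message."""
    tracer = Tracer()
    try:
        execute(account, tracer)
        return None, tracer.messages
    except Exception as exc:
        # The last message says which block we were in when it failed.
        return f"{type(exc).__name__} after '{tracer.messages[-1]}'", tracer.messages


error, _ = run_with_trace({"revenue": 1000})
print(error)  # TypeError after 'reading parent credit limit'
```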

Of course, it would be different if we could actually debug plugins, but, well… And I don’t mean the Plugin Profiler, though it can be quite useful sometimes.

In the last part of his post, Daryl talks about unit testing and cherry-picking what to test. That makes sense to me, but I’d add that, with a very limited amount of reusable code (that’s the basic assumption here), everything else should probably be tested as a whole anyway (in which case the difference between shorter and longer functions might not matter much). This is because it’s quite likely that one short function will affect what happens in another. For example:

- We would set the max credit limit

- We would create account log entries (possibly based on what we did above)

- And we would update the account status (it might be a VIP or a regular account, based on the credit limit we set above)
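To illustrate why such steps tend to get tested together, here is a minimal Python sketch in which both the log entry and the status read the credit limit set by the first step. The function names, the revenue / 10 rule, and the VIP threshold are all hypothetical. Testing `update_status` on its own would mean faking the state that `set_max_credit_limit` produces, so a test that calls `execute` exercises the real chain instead.

```python
VIP_CREDIT_LIMIT = 100_000  # hypothetical threshold, for illustration only


def set_max_credit_limit(account: dict) -> None:
    account["credit_limit"] = account["annual_revenue"] // 10


def create_log_entries(account: dict) -> None:
    # Depends on the credit limit set by the previous step.
    account.setdefault("log", []).append(f"credit limit: {account['credit_limit']}")


def update_status(account: dict) -> None:
    # Also depends on the credit limit.
    account["status"] = "VIP" if account["credit_limit"] >= VIP_CREDIT_LIMIT else "regular"


def execute(account: dict) -> dict:
    set_max_credit_limit(account)
    create_log_entries(account)
    update_status(account)
    return account


def test_execute_as_a_whole() -> None:
    result = execute({"annual_revenue": 2_000_000})
    assert result["credit_limit"] == 200_000
    assert result["log"] == ["credit limit: 200000"]
    assert result["status"] == "VIP"


test_execute_as_a_whole()
```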

Admittedly, I don’t use unit testing that much in plugins; I would normally prefer integration testing with EasyRepro (and, quite often, no matter what I prefer, dev testing is still the last thing we ever get to do on the project, which is not great at all, of course, but that’s a different story).