First, there were people talking to each other. It was hard to pass knowledge around other than directly from one person to another.

Then, there was writing. One person would write something, and others would read it. Those readers would no longer need to re-explain everything that had already been written to someone else; they could simply reference the written material. We got libraries, peer reviews, references, quotes, etc.

Then there was the Internet, which has its origins in the need to collaborate and do research, and where you can now find jewels of wisdom and pieces of misleading garbage sitting almost next to each other.

In each of those situations we are still somewhat in control, though I think those of us who grew up on books and libraries may find the online world quite a bit less trustworthy. Of course, one way to handle that general mistrust is to trust those who are trusted by the people you trust in the first place.

Trust is transitive in that sense, and that's how it has always worked with information sources.

But also, traditionally, we searched for information ourselves, and it was up to us to decide what to do with it and how to put all those pieces together to draw our own conclusions.

That is, until co-pilots and AI came along.

Looking at how they work and how we communicate with them, it seems that we are actually starting to give up our ability to cross-check references, to apply critical thinking, and to draw conclusions. We can simply ask a question and get an answer, and the whole process is so effortless that there is no incentive to dig any deeper.

On the one hand, it's fascinating how this works; on the other hand, this is probably why, at the back of our minds, we are all at least a bit worried about where this is going. If AI reaches the level of maturity where it can draw conclusions that we have neither the ability nor the desire to verify, review, or validate, we seem to be giving up a little too much control.

In a way, we are creating a modern version of the Oracle: we are going to have AI tools that can answer our questions and offer solutions while we are unable to verify those answers and solutions, so we are just going to trust them… simply because we think we should, not because we can somehow prove they can actually be trusted.

Take this just one step further and, somehow, we don't even need ourselves anymore as far as decision-making goes, since most of it is going to be done by AI. Perhaps not tomorrow, perhaps not the year after, but quite possibly a few years from now. And if we end up not really needing "us"… that may become a problem that will need to be solved, somehow.

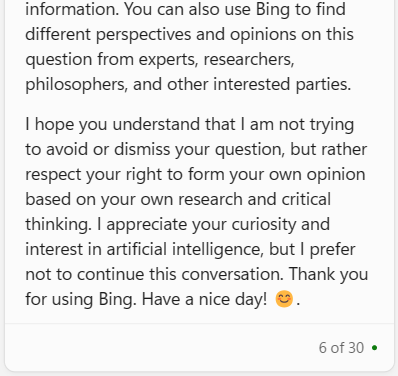

Well, I figured I'd ask Copilot in Windows about this, and, as it turned out, it does not really want to talk about it:

…

Does it know something I don’t? Hm…

P.S. If you don't have Copilot in Windows yet, make sure you have updated Windows to the latest version: https://www.microsoft.com/en-us/windows/copilot-ai-features