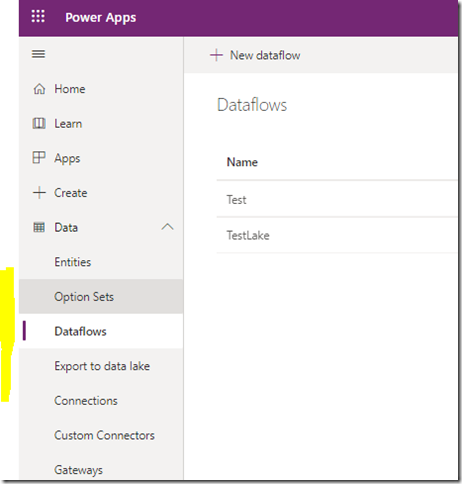

Have you tried Power Platform dataflows yet?

I would not be too surprised if you have not – I had not tried them until this weekend either. Might not have completely figured them out yet, but here is a quick rundown so far.

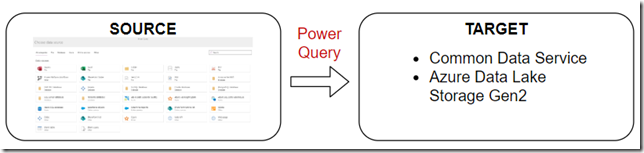

Basically, a data flow is a ETL process that takes data from the source, uses Power Query to transform it, and places this data in one of the two possible destinations:

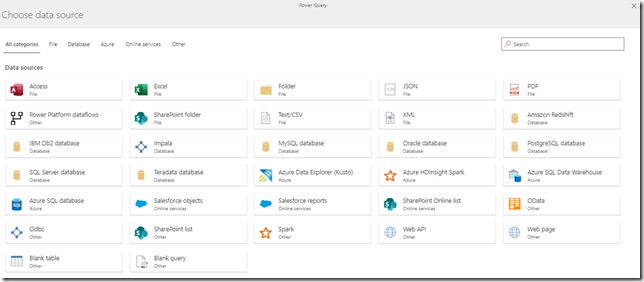

Among those sources, there are some really generic ones – you can use Web API, OData, JSON, XML… They can be loaded from OneDrive, they can be loaded from a URL, etc:

For the Power Automate/Power Apps folks reading this – Data Flows are not using all the familiar connectors you may be used to when creating power automate Flows, for instance. As I understand it, Data Flows cannot be extended by throwing in yet another data source in the same way you would do it for Power Automate, for example. Although, since there are those generic “Web API/OData” sources, the extensibility is still there.

However, Data Flows did not start in Power Platform – they were first introduced in Power BI. There is a great post that explains why there were introduced there:

https://powerbi.microsoft.com/fr-fr/blog/introducing-power-bi-data-prep-wtih-dataflows/

“Previously, ETL logic could only be included within datasets in Power BI … Power BI dataflows store data in Azure Data Lake Storage Gen2”

In other words, the problem Data Flows meant to solve in the Power BI world was about doing all that data transformation work outside of the Power BI dataset to make it much more reusable.

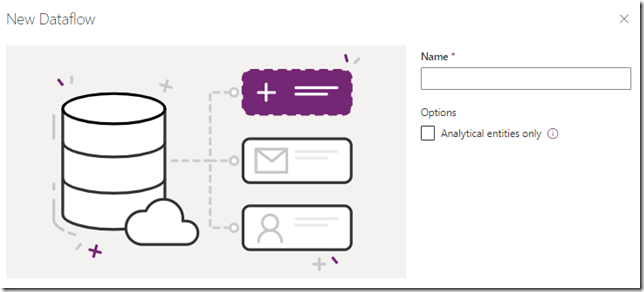

Power Platform dataflows seems to be doing exactly the same, although they can also store data in the Common Data Service. Actually, by default they will target Common Data Service. If you choose “Analytical entities only”, you’ll get data stored in Azure Data Lake Storage Gen2:

But what if you wanted to move data from CDS to Azure Data Lake Storage Gen2? Potentially (and I have no tried), you can probably choose “Analytical entities only” on the screenshot above, and, then, connect to CDS using Web API, then move that data to the data lake.

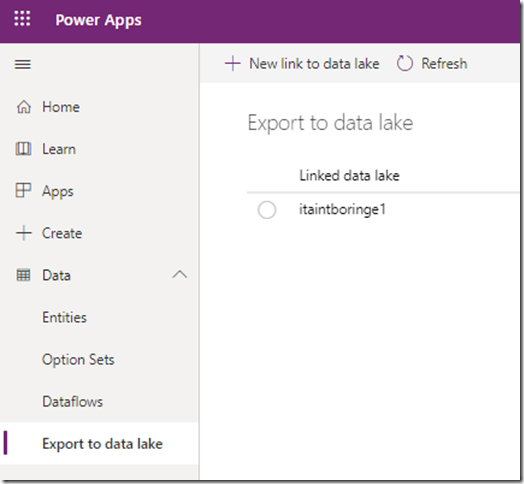

There is another option in the Power Platform which is called Export to Data Lake:

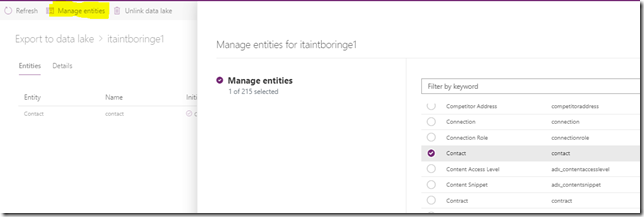

There is some initial setup, but, once it’s all done, you can enable CDS entities for export to data lake:

Important: don’t forget to enable Change Tracking on your CDS entity if you want it to show up on the list above.

So, with all the above in mind, here are two other facts / observations (in no particular order):

- When setting up a data flow, you need to configure refresh frequency. For the data lake “target”, you can refresh target dataset up to 48 time per day. It seems there is no such limitation for CDS.

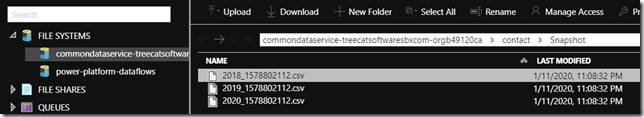

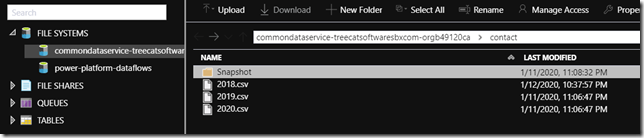

- “Export to data lake” works somewhat differently from a regular data flow. It does create files for the records, but it also creates snapshots. The snapshots are not updated at once – they are updated with certain frequency (about 1 hour?)

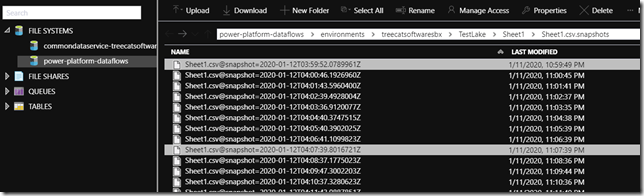

Notice how, in the storage explorer, I have snapshots dated Jan 11:

However, contacts files for 2018 has already been updated on Jan 12:

Have a look at the following post for a bit more details on this:

https://powerapps.microsoft.com/en-us/blog/exporting-cds-data-to-azure-data-lake-preview/

Compare those screenshots above to a regular Data Flow which has been configured with 1 minute refresh frequency (and, therefore, which has stopped to run because of the 48 runs per day limitation):

As you can see, there is a snapshot every minute, at least for as long as the data flow kept running.

Do you by any chance know if we can use this for CDS to CDS between environments? There is no connector, but that doesn’t mean it isn’t possible:)

I just haven’t figured out yet….

There is OData data source, so you might try using web api endpoint (for example: https://orgname.api.crm3.dynamics.com/api/data/v9.1/accounts). It does load the data, but I did not try pushing it to CDS. Either way, let me know if you figure it out:)

Does anyone know anything about best practices regarding the management of snapshots for analytical flows?

Does the Dataflow ever delete the snapshot or do we need to do so ourselves?

Thank!